The HTTP Data Recorder Service is a generic data ingestion service which allows a client to send data to the Insights backend.

All raw data is stored in the system. Uploading new data triggers the processing of that data, which produces a new processed data set that is stored separately from the input data.

The target project and the content type of the data need to be given. In general, the service accepts any content type (such as JSON, XML or ZIP), but it is the responsibility of the project-specific processor to handle the input correctly.

We recommend using preemptive authentication. That way, the basic authentication credentials are sent with the first request, instead of only after the server has returned an unauthorized response. See also the Apache documentation.
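As an illustration of preemptive authentication, here is a minimal Python sketch that attaches the Authorization header when the request is built, so no unauthorized round trip is needed. It uses only the standard library. The base URL, the /v2/demo path and the credential foo:bar are the illustrative values from the example further down this page; the function names `basic_auth_header` and `build_upload_request` are hypothetical helpers, not part of any Insights client library.

```python
import base64
import json
import urllib.request

# Base URL as documented on this page; project and credentials are examples.
BASE_URL = "https://bosch-iot-insights.com/data-recorder-service"


def basic_auth_header(user: str, password: str) -> str:
    """Return the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def build_upload_request(project, payload, user, password):
    """Build (but do not send) a JSON POST with credentials attached up front."""
    return urllib.request.Request(
        url=f"{BASE_URL}/v2/{project}",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Preemptive: sent with the first request, not after a 401.
            "Authorization": basic_auth_header(user, password),
        },
    )


request = build_upload_request("demo", {"hello": "world"}, "foo", "bar")
# Sending is left out of this sketch: urllib.request.urlopen(request)
```

The request object can be inspected before it is sent, which is a convenient way to confirm that the credentials are present on the very first call.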

Data Recorder Service - base URL

Your application will need to address the following endpoint:

https://bosch-iot-insights.com/data-recorder-service

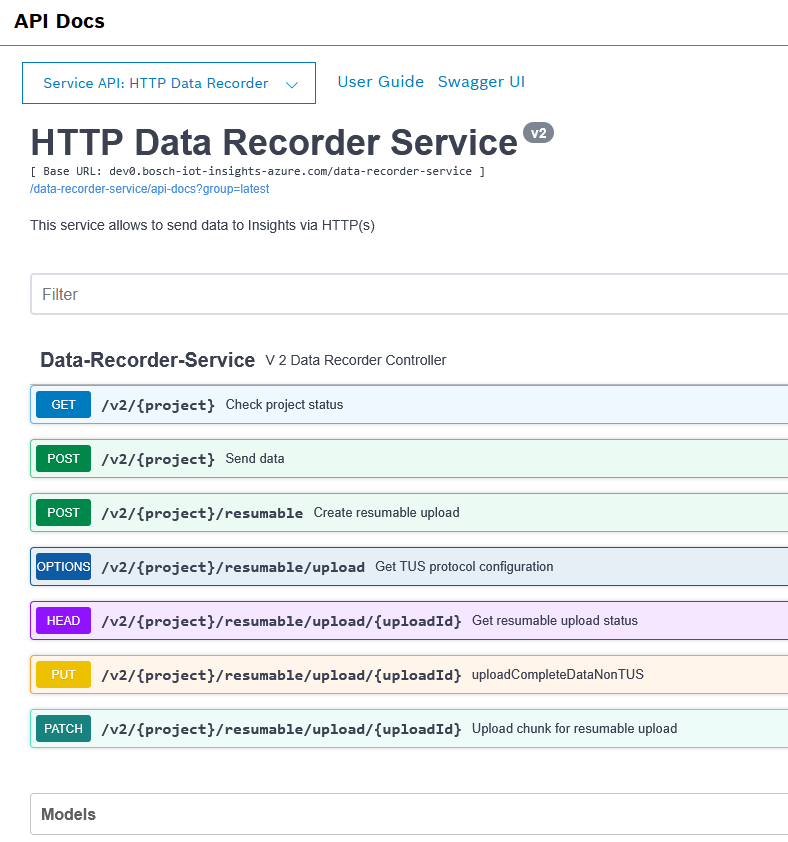

Data Recorder Service - Swagger UI

To try out the API interactively, you can use the following entry point:

https://bosch-iot-insights.com/ui/pages/api/data-recorder/latest

Example

The following HTTP POST request shows an example request for a project with name 'demo', using the basic authentication of the credential foo:bar and sending JSON content.

    HTTP POST https://bosch-iot-insights.com/data-recorder-service/v2/demo
    Header:
    Content-Type: application/json
    Authorization: Basic Zm9vOmJhcg==
    Body:
    { "hello" : "world" }

Resumable upload

The resumable upload improves the efficiency of the system and is also convenient for the customer in case of connection interruptions and similar problems. In such a case, the upload can be continued from where it stopped instead of starting again from the very beginning.

The Data Recorder Service allows for resumable upload in accordance with the TUS protocol (also spelled tus). For the protocol specification, refer to tus.io.

We recommend that you use one of the standard client implementations of the protocol, as listed at https://tus.io/implementations.

For such an upload, you will need the following endpoints of the Data Recorder Service API:

- POST - to send the initial parameters, e.g. metadata, total upload length, etc.

- PATCH - to start the actual upload in chunks based on the information from the HEAD requests

- HEAD - to check the upload progress in bytes, in particular this will be the returned Upload-Offset parameter value

- OPTIONS - to check the supported TUS protocol version

You can find detailed endpoint descriptions in the API documentation itself, e.g. at https://bosch-iot-insights.com/ui/pages/api/data-recorder/latest.

Sequence of steps

Generally, this is what you can expect as a sequence of steps:

With the POST request, you will:

- create the metadata of the upload

- provide the expected total Content-Length of the upload

- alternatively, use the Upload-Defer-Length parameter in order to provide the expected total upload length later on, namely in the first PATCH request

- receive an upload URL with an auto-generated token

- receive the input data ID

- receive the metadata expiry time (see also the limitations section below)

- receive the collection name where the metadata will be stored

After the POST request, you will send one or several PATCH requests to perform the data upload itself, chunk by chunk. Normally, the system continues with the next chunk automatically. In case of connection interruptions, however, you will need to use the response from the HEAD request for every chunk, in particular the returned value of the Upload-Offset parameter, to determine where to continue.

When the expected total upload length is reached, the metadata is deleted, the changes are officially committed and the input data for processing by the respective pipeline is created.
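The chunking and resume logic described above can be sketched in Python as follows. This is a hedged illustration, not the service's client: `patch_headers` and `remaining_chunks` are hypothetical helper names, and the header names are the ones the tus protocol defines for PATCH requests. The protocol version 1.0.0 is an assumption that should be confirmed with the OPTIONS endpoint. No network calls are made; the offset passed in stands for the Upload-Offset value returned by a HEAD request.

```python
from typing import Dict, Iterator, Tuple

# Assumption: tus protocol version 1.0.0; confirm via the OPTIONS endpoint.
TUS_VERSION = "1.0.0"


def patch_headers(offset: int) -> Dict[str, str]:
    """Headers the tus protocol defines for a PATCH request at a given offset."""
    return {
        "Tus-Resumable": TUS_VERSION,
        "Upload-Offset": str(offset),
        "Content-Type": "application/offset+octet-stream",
    }


def remaining_chunks(
    total_length: int, offset: int, chunk_size: int
) -> Iterator[Tuple[int, int]]:
    """Yield (start, end) byte ranges still to upload; end is exclusive.

    `offset` is the number of bytes the server already holds, i.e. the
    Upload-Offset value reported by a HEAD request (0 for a new upload).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= offset <= total_length:
        raise ValueError("offset must lie within the upload")
    start = offset
    while start < total_length:
        end = min(start + chunk_size, total_length)
        yield start, end
        start = end


# Resuming a 25-byte upload after 20 bytes arrived, with 10-byte chunks:
for start, end in remaining_chunks(25, 20, 10):
    headers = patch_headers(start)  # one PATCH request per remaining range
```

Because the ranges are derived from the reported offset rather than from local state, the same loop serves both a fresh upload and a resumed one.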

Limitations

You can upload data in chunks sized between 1 MB and 100 MB.

The maximum total upload size per resumable upload is 50 GB. However, you can perform as many resumable uploads as needed.

The metadata is stored in the database for 5 minutes and expires unless there is a successful PATCH request in the meantime. The metadata validity is extended by 5 more minutes with every newly uploaded chunk.
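A client can check the size limits above before it starts an upload. The following Python sketch is one way to do that. The function name `check_upload_plan` is hypothetical, and treating MB and GB as decimal units is an assumption, since this page does not say whether binary units are meant. The page also does not state whether a final chunk may be smaller than 1 MB, so the check only covers the configured chunk size.

```python
from typing import List

# Assumption: decimal units; adjust if the service means MiB/GiB.
MB = 1_000_000
GB = 1_000 * MB

MIN_CHUNK_SIZE = 1 * MB
MAX_CHUNK_SIZE = 100 * MB
MAX_TOTAL_SIZE = 50 * GB


def check_upload_plan(total_length: int, chunk_size: int) -> List[str]:
    """Return the documented limits that a planned upload would violate."""
    problems = []
    if not MIN_CHUNK_SIZE <= chunk_size <= MAX_CHUNK_SIZE:
        problems.append("chunk size must be between 1 MB and 100 MB")
    if total_length > MAX_TOTAL_SIZE:
        problems.append("total upload size must not exceed 50 GB")
    return problems


# A 10 GB upload in 10 MB chunks stays within both limits.
problems = check_upload_plan(10 * GB, 10 * MB)
```

Returning a list rather than raising on the first problem lets the caller report every violated limit at once.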

Disambiguation: Please note that there is also a PUT endpoint, namely PUT /v2/{project}/resumable/upload/{uploadId}. It uses the token generated by the POST resumable endpoint, but uploads the complete data in one chunk. Do not use this endpoint if you want to perform a resumable upload.